The Replication Network

Furthering the Practice of Replication in Economics

AoI*: “Computational Reproducibility and Robustness of Empirical Economics and Political Science Research”

[*AoI = “Articles of Interest” is a feature of TRN where we report abstracts of recent research related to replication and research integrity.]

ABSTRACT (taken from the article)

“This systematic and large-scale reproduction effort tests the reproducibility and robustness of economics and political science. We reproduced original analyses and conducted robustness checks of 110 articles recently published in leading economics and political science journals (all of which have mandatory data and code sharing policies). We found that over 85% of published claims were computationally reproducible. In robustness checks, our re-analyses lead to 72% of statistically significant estimates to remain significant and in the same direction, and the median reproduced effect size is (nearly) the same as the originally published effect size (that is, 99% of the published effect size). Additionally, six independent research teams examined 12 prespecified hypotheses about determinants of robustness. Research teams with more experience found lower levels of robustness, but robustness correlated with neither author characteristics nor data availability.”

REFERENCE

Brodeur, A., Cook, N., Mikola, D., Fiala, L., & Heyes, A. (2026). Computational Reproducibility and Robustness of Empirical Economics and Political Science Research. Nature.

REED: You Can Calculate Power Retrospectively — Just Don’t Use Observed Power

In this blog, I highlight a valid approach for calculating power after estimation—often called retrospective power. I provide a Shiny App that lets readers explore how the method works and how it avoids the pitfalls of “observed power” — try it out for yourself! I also link to a webpage where readers can enter any estimate, along with its standard error and degrees of freedom, to calculate the corresponding power.

A. Why retrospective power can be useful

Most researchers calculate power before estimation, generally to plan sample sizes: given a hypothesized effect, a significance level, and degrees of freedom, power analysis asks how large a study must be to achieve a desired probability of detection.

That’s good practice, but key inputs—variance, number of clusters, intraclass correlation coefficient (ICC), attrition, covariate performance—are guessed before the data exist, so realized (ex post) values often differ from what was planned. As Doyle & Feeney (2021) note in their guide to power calculations, “the exact ex post value of inputs to power will necessarily vary from ex ante estimates.” This is why it can be useful—even preferable—to also calculate power after estimation.
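To make the planning step concrete, here is a minimal Python sketch for the slope in a simple regression Y = a + bX + ε. It uses a normal approximation rather than the exact t-test. The function name and its inputs (`required_n_for_slope`, `sigma`, `sd_x`) are hypothetical and chosen for illustration. The error standard deviation σ and the spread of X are exactly the kind of inputs that must be guessed before the data exist.

```python
import math
from statistics import NormalDist


def required_n_for_slope(b, sigma, sd_x, power=0.80, alpha=0.05):
    """Approximate sample size for a two-sided test of the OLS slope b.

    Normal approximation: SE(b_hat) is roughly sigma / (sd_x * sqrt(n)).
    The (negligible) chance of rejecting in the wrong tail is ignored.
    """
    z = NormalDist()
    multiplier = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)  # about 2.80 for 5% and 80%
    return math.ceil((multiplier * sigma / (b * sd_x)) ** 2)


# Planned with a guessed error SD of 1.0:
print(required_n_for_slope(b=0.5, sigma=1.0, sd_x=1.0))  # 32
```

The required n scales with σ². If the realized error SD turns out 25% larger than planned (1.25 instead of 1.0), about 56% more observations would be needed to keep the same power (1.25² ≈ 1.56). Ex-post power is designed to reveal this kind of gap.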

Ex-post power can be helpful in at least three situations.

1) It can provide a check on whether ex-ante power assessments were realized. Because actual implementation rarely matches the original plan—fewer participants recruited, geographic constraints on clusters, or greater dependency within clusters than anticipated—realized power often departs from planned power. Calculating ex-post power highlights these gaps and helps diagnose why they occurred.

2) It can help distinguish whether a statistically insignificant estimate reflects a negligible effect size or an imprecise estimate. In other words, it can separate “insignificant because small” from “insignificant because underpowered.”

3) It can flag potential Type M (magnitude) risk when results are significant but measured power is low. In this way, it can warn of possible overestimation and prompt more cautious interpretation (Gelman & Carlin, 2014).
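For point 3, Gelman & Carlin (2014) pair power with two further quantities: a Type S (sign) error rate, and an exaggeration ratio, which is the expected Type M error. Below is a minimal Python sketch in the spirit of their calculation. It assumes a normal sampling distribution, whereas their published code also allows a t distribution. The function name `retrodesign_normal` and its defaults are hypothetical. The true effect passed in should be a hypothesized value, not the point estimate itself.

```python
import random
from statistics import NormalDist


def retrodesign_normal(true_effect, se, alpha=0.05, n_sims=100_000, seed=1):
    """Power, Type S rate, and exaggeration ratio for a two-sided test.

    true_effect: hypothesized true effect (assumed positive here).
    se: standard error of the estimate.
    """
    std = NormalDist()
    z_crit = std.inv_cdf(1 - alpha / 2)
    lam = true_effect / se
    p_hi = 1 - std.cdf(z_crit - lam)   # significant with the correct sign
    p_lo = std.cdf(-z_crit - lam)      # significant with the wrong sign
    power = p_hi + p_lo
    type_s = p_lo / power
    # Exaggeration ratio: mean |estimate| among significant draws, relative to the true effect.
    rng = random.Random(seed)
    significant = []
    for _ in range(n_sims):
        estimate = true_effect + se * rng.gauss(0.0, 1.0)
        if abs(estimate) > z_crit * se:
            significant.append(abs(estimate))
    exaggeration = (sum(significant) / len(significant)) / true_effect
    return power, type_s, exaggeration


power, type_s, exaggeration = retrodesign_normal(true_effect=1.0, se=1.0)
print(f"power={power:.2f}, type S={type_s:.3f}, exaggeration={exaggeration:.1f}")
```

In this example, power is about 17%. Estimates that reach significance overstate the true effect by a factor of roughly 2.5 on average.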

In short, while ex-ante power is essential for planning, ex-post power is a practical complement for evaluation and interpretation. It connects power claims with realized outcomes, enables the diagnosis of deviations from plan, and provides additional insights when interpreting both null and significant findings.

B. Why the usual way (“Observed Power”) is a bad idea

Most statisticians advise against computing observed power, which plugs the observed effect and its estimated standard error into a power formula (McKenzie & Ozier, 2019). Because observed power is a one-to-one (monotone) transformation of the test statistic—and hence of the p-value—it adds no information and encourages tautological explanations (e.g., “the result was non-significant because power was low”).
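The one-to-one mapping is easy to see in code. This minimal Python sketch computes "observed power" from nothing but the p-value, so it cannot tell you anything the p-value did not. It uses a two-sided z-test, which is a normal approximation rather than the t-test discussed later. The helper name `observed_power_from_p` is hypothetical.

```python
from statistics import NormalDist

_STD = NormalDist()


def observed_power_from_p(p_value, alpha=0.05):
    """'Observed power' of a two-sided z-test, computed from its p-value alone.

    Plugging the estimate into the power formula sets the noncentrality to
    |z| = |estimate| / SE, and |z| is fully determined by the p-value.
    """
    z_obs = _STD.inv_cdf(1 - p_value / 2)   # |z| implied by the p-value
    z_crit = _STD.inv_cdf(1 - alpha / 2)
    return (1 - _STD.cdf(z_crit - z_obs)) + _STD.cdf(-z_crit - z_obs)


for p in (0.01, 0.05, 0.20, 0.50):
    print(f"p = {p:.2f} -> observed power = {observed_power_from_p(p):.3f}")
```

A result with p exactly equal to α = 0.05 always has an observed power of about 50%. Larger p-values always map to lower observed power. This is why "non-significant because power was low" is circular.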

Worse, as an estimator of a study’s design power, observed power is both biased and highly variable, precisely because it treats a noisy point estimate as the true effect. These problems are well documented (Hoenig & Heisey, 2001; Goodman & Berlin, 1994; Cumming, 2014; Maxwell, Kelley, & Rausch, 2008). These concerns are not just theoretical: I demonstrate below how minor sampling variation translates into dramatic changes in observed power.

C. A better retrospective approach: SE–ES

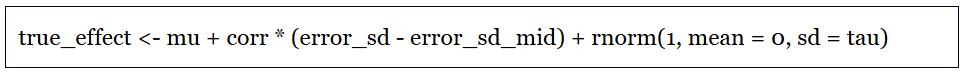

In a recent paper (Tian et al., 2025), my coauthors and I propose a practical alternative that we call SE–ES (Standard Error–Effect Size). The idea is simple. The researcher specifies a hypothesized effect size (what would be substantively important), uses the estimated standard error from the fitted regression, and combines those with the relevant degrees of freedom to compute power for a two‑sided t‑test.
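A minimal, standard-library Python sketch of the SE–ES calculation follows. Several assumptions should be flagged:

- The function names are hypothetical.
- Power is computed with the shifted central t approximation used in Gelman & Carlin's (2014) retrodesign code, not an exact noncentral t distribution. The two are close for moderate and large degrees of freedom.
- The t CDF is built from the regularized incomplete beta function (the standard continued-fraction method), so no third-party packages are needed.

Tian et al.'s own implementation may differ in these details.

```python
import math


def _betacf(a, b, x, max_iter=1000, eps=1e-14):
    """Continued fraction for the regularized incomplete beta (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even and odd steps of the continued fraction.
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def _betainc(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def t_cdf(t, df):
    """CDF of Student's t distribution with df degrees of freedom."""
    tail = 0.5 * _betainc(df / 2.0, 0.5, df / (df + t * t))  # P(T > |t|)
    return 1.0 - tail if t > 0 else tail


def t_ppf(p, df):
    """Quantile of Student's t, found by bisection on t_cdf (for 0 < p < 1)."""
    lo, hi = -1e4, 1e4
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def se_es_power(effect, se, df, alpha=0.05):
    """SE-ES retrospective power for a two-sided t-test.

    effect: hypothesized, substantively important effect (NOT the point estimate).
    se:     estimated standard error from the fitted regression.
    df:     residual degrees of freedom.
    Uses a shifted central t approximation rather than the exact noncentral t.
    """
    t_crit = t_ppf(1.0 - alpha / 2.0, df)
    shift = abs(effect) / se
    upper = 1.0 - t_cdf(t_crit - shift, df)  # reject with the hypothesized sign
    lower = t_cdf(-t_crit - shift, df)       # reject with the opposite sign
    return upper + lower


# Hypothetical inputs: effect of interest 0.5, estimated SE 0.2, 98 residual df.
print(round(se_es_power(effect=0.5, se=0.2, df=98), 3))
```

The only quantity estimated from the data is the standard error. The effect size is fixed by the researcher, and this is what separates SE–ES from observed power.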

Because SE–ES fixes the effect size externally—rather than using the noisy point estimate—it yields a serviceable retrospective power number: approximately unbiased for the true design power with a reasonably tight 95% estimation interval, provided samples are not too small.

To make this concrete, suppose the data-generating process is Y = a + bX + ε, with ε a classical error term and b estimated by OLS. If the true design power is 80%, simulations at sample sizes n = 30, 50, and 100 show that the SE–ES estimator is approximately unbiased, with 95% estimation intervals that tighten as n grows: (i) n = 30 yields (60%, 96%); (ii) n = 50 yields (65%, 94%); and (iii) n = 100 yields (70%, 90%).
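One way to build a simulation with a known "true design power" is to fix X and σ, then choose the slope b so that the design hits the target. The relationships are shown below under a normal approximation to the two-sided t-test. This approximation is an assumption, and the paper's exact simulation design may differ.

```latex
\begin{aligned}
\mathrm{SE}(\hat b) &= \frac{\sigma}{\sqrt{\sum_{i=1}^{n}(X_i-\bar X)^2}} \\
\text{Power} &\approx \Phi\!\left(\frac{b}{\mathrm{SE}(\hat b)} - z_{1-\alpha/2}\right)
  + \Phi\!\left(-\frac{b}{\mathrm{SE}(\hat b)} - z_{1-\alpha/2}\right) \\
\frac{b}{\mathrm{SE}(\hat b)} &\approx z_{0.975} + z_{0.80} \approx 1.960 + 0.842 = 2.802
  \quad \text{(80\% power, } \alpha = 0.05\text{)}
\end{aligned}
```

SE–ES replaces the unknown σ in SE(b̂) with its estimate while holding b at the hypothesized value. Sampling variation therefore enters only through the estimated σ, which is why the intervals tighten as n grows.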

D. Try it yourself: A Shiny app that compares SE–ES with Observed Power

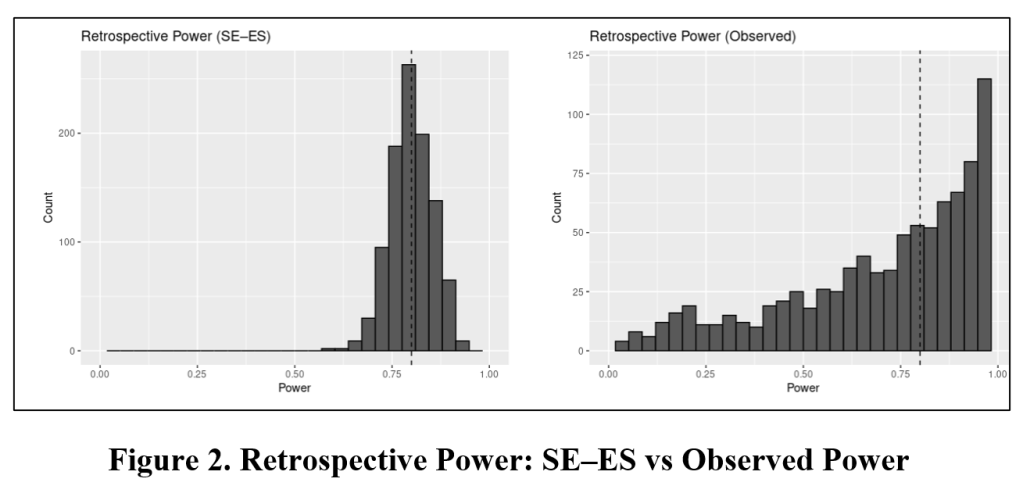

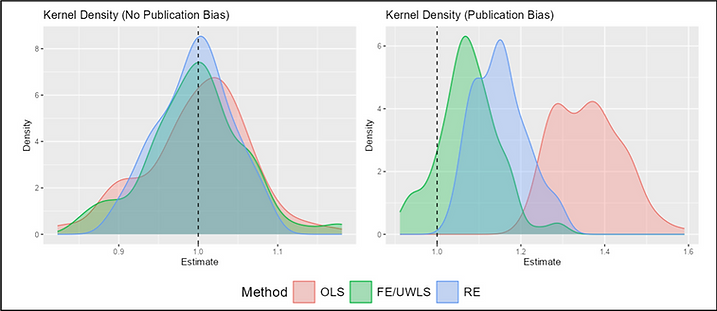

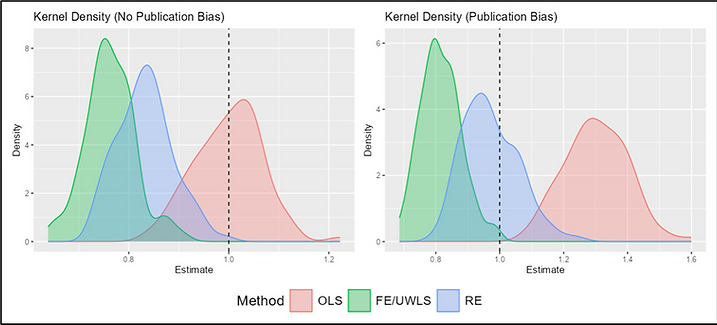

To visualize the contrast, I have created a companion Shiny app. It lets you vary sample size (n), target/true power, and α, then: (1) runs Monte Carlo replications of Y ~ 1 + βX; (2) plots side‑by‑side histograms of retrospective power for SE–ES and Observed Power; and (3) reports the mean and the 95% simulation interval (the central 2.5%–97.5% range of simulated power values) for each method. Power is calculated under two‑tailed testing.
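Below is a rough Python analogue of steps (1) to (3). It is not the app's code, and several choices are assumptions for illustration:

- It uses a normal approximation to the two-sided test.
- It draws X once from a standard normal and holds it fixed.
- It sets σ = 1 and the intercept to 1.
- The function names are hypothetical.

SE–ES plugs the true (hypothesized) slope into the power formula alongside the estimated standard error, while Observed Power plugs in the estimated slope.

```python
import random
from statistics import NormalDist

STD = NormalDist()


def two_sided_power(ncp, alpha):
    """Normal-approximation power of a two-sided test with noncentrality ncp."""
    z_crit = STD.inv_cdf(1 - alpha / 2)
    return (1 - STD.cdf(z_crit - ncp)) + STD.cdf(-z_crit - ncp)


def summarize(values):
    """Mean and central 95% simulation interval (simple order-statistic percentiles)."""
    v = sorted(values)
    k = len(v)
    return sum(v) / k, v[int(0.025 * k)], v[int(0.975 * k) - 1]


def simulate(n=100, true_power=0.80, alpha=0.05, n_sims=1000, seed=123, sigma=1.0):
    """Monte Carlo comparison of SE-ES and Observed Power for Y = 1 + bX + e."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]  # regressor drawn once, held fixed
    x_bar = sum(x) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    true_se = sigma / sxx ** 0.5
    # Choose the true slope so that the design power equals true_power.
    b = (STD.inv_cdf(1 - alpha / 2) + STD.inv_cdf(true_power)) * true_se
    se_es, observed = [], []
    for _ in range(n_sims):
        y = [1.0 + b * xi + rng.gauss(0.0, sigma) for xi in x]
        y_bar = sum(y) / n
        b_hat = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
        a_hat = y_bar - b_hat * x_bar
        ssr = sum((yi - a_hat - b_hat * xi) ** 2 for xi, yi in zip(x, y))
        se_hat = (ssr / (n - 2) / sxx) ** 0.5
        se_es.append(two_sided_power(b / se_hat, alpha))         # SE-ES: hypothesized effect
        observed.append(two_sided_power(b_hat / se_hat, alpha))  # Observed Power: estimate
    return summarize(se_es), summarize(observed)


for n in (30, 100):
    (m1, lo1, hi1), (m2, lo2, hi2) = simulate(n=n)
    print(f"n={n}: SE-ES mean {m1:.3f} [{lo1:.3f}, {hi1:.3f}] | "
          f"Observed mean {m2:.3f} [{lo2:.3f}, {hi2:.3f}]")
```

With these settings, two patterns should appear. The SE–ES interval should be much narrower than the Observed Power interval. The SE–ES interval at n = 30 should also be noticeably wider than at n = 100.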

What you should see: the Observed Power histogram tracks the significance test (mass near 0 when results are null, near 1 when they are significant) because it is just a re‑expression of the t statistic. Moreover, its wide spread of estimates would make it unusable even if it were unbiased. The SE–ES histogram, in contrast, concentrates near the design’s target power and tightens as sample size grows.

To use the app, click here. Enter your input values in the Shiny app’s sidebar panel. The panel below shows an example with the sample size set to 100, true power set to 80% (for two-sided significance), alpha set to 5%, the number of simulations set to 1,000, and the random seed set to 123.

Once you have entered your input values, click “Run simulation”. Two histograms will appear. The histogram on the left reports the distribution of estimated power values using the SE–ES method; the histogram on the right reports the same using Observed Power. The vertical dotted line indicates true power.

Immediately below this figure, the Shiny app produces a table that reports the mean and 95% estimation interval of estimated power for the SE–ES and Observed Power methods. For this example, with true power = 80%, the Observed Power distribution is left-skewed and biased downwards (mean = 73.4%), with a 95% estimation interval of (14.5%, 99.8%). In contrast, the SE–ES distribution is approximately symmetric and approximately centered on the true value of 80%, with a 95% estimation interval of (68.5%, 89.9%).

The reader is encouraged to try out different target power values and, most importantly, different sample sizes. What you should see is that the SE–ES method works well at every true power value but, in this context, becomes less serviceable for sample sizes below 30.

E. Bottom line—and an easy calculator you can use now

Power calculation is useful before estimation, for planning. But it is also useful after estimation, as an interpretative tool. Furthermore, it is easy to calculate. For readers interested in calculating retrospective power for their own research, Thomas Logchies and I have created an online calculator that is easy to use: click here. There you can enter α, degrees of freedom, an estimated standard error, and a hypothesized effect size to obtain SE–ES retrospective power for your estimate. Give it a go!

NOTE: Bob Reed is Professor of Economics and the Director of UCMeta at the University of Canterbury. He can be reached at bob.reed@canterbury.ac.nz.

References

Cumming, G. (2014). The new statistics: Why and how. Psychological Science, 25(1), 7–29. https://doi.org/10.1177/0956797613504966

Doyle, M.-A., & Feeney, L. (2021). Quick guide to power calculations. https://www.povertyactionlab.org/resource/quick-guide-power-calculations

Gelman, A., & Carlin, J. (2014). Beyond power calculations: Assessing type S (sign) and type M (magnitude) errors. Perspectives on Psychological Science, 9(6), 641–651. https://sites.stat.columbia.edu/gelman/research/published/retropower_final.pdf

Goodman, S. N., & Berlin, J. A. (1994). The use of predicted confidence intervals when planning experiments and the misuse of power when interpreting results. Annals of Internal Medicine, 121(3), 200–206. https://doi.org/10.7326/0003-4819-121-3-199408010-00008

Hoenig, J. M., & Heisey, D. M. (2001). The abuse of power: The pervasive fallacy of power calculations for data analysis. The American Statistician, 55(1), 19–24. https://doi.org/10.1198/000313001300339897

Maxwell, S. E., Kelley, K., & Rausch, J. R. (2008). Sample size planning for statistical power and accuracy in parameter estimation. Annual Review of Psychology, 59(1), 537–563. https://doi.org/10.1146/annurev.psych.59.103006.093735

McKenzie, D., & Ozier, O. (2019, May 16). Why ex-post power using estimated effect sizes is bad, but an ex-post MDE is not. Development Impact (World Bank Blog). https://blogs.worldbank.org/en/impactevaluations/why-ex-post-power-using-estimated-effect-sizes-bad-ex-post-mde-not

Tian, J., Coupé, T., Khatua, S., Reed, W. R., & Wood, B. D. (2025). Power to the researchers: Calculating power after estimation. Review of Development Economics, 29(1), 324–358. https://doi.org/10.1111/rode.13130

AoI*: “Introducing Synchronous Robustness Reports” by Bartos et al. (2025)

NOTE: The article is behind a paywall.

ABSTRACT (taken from the article)

“Most empirical research articles feature a single primary analysis that is conducted by the authors. However, different analysis teams usually adopt different analytical approaches and frequently reach varied conclusions. We propose synchronous robustness reports [SRRs] — brief reports that summarize the results of alternative analyses by independent experts — to strengthen the credibility of science.”

“To integrate SRRs seamlessly into the publication process, we suggest the framework outlined as a flowchart in Fig. 2. As the flowchart shows, the SRRs form a natural extension to the standard review process.”

REFERENCE

AoI*: “The Sources of Researcher Variation in Economics” by Huntington-Klein et al. (2025)

ABSTRACT (taken from the article)

“We use a rigorous three-stage many-analysts design to assess how different researcher decisions—specifically data cleaning, research design, and the interpretation of a policy question—affect the variation in estimated treatment effects.”

“A total of 146 research teams each completed the same causal inference task three times each: first with few constraints, then using a shared research design, and finally with pre-cleaned data in addition to a specified design.”

“We find that even when analyzing the same data, teams reach different conclusions. In the first stage, the interquartile range (IQR) of the reported policy effect was 3.1 percentage points, with substantial outliers.”

“Surprisingly, the second stage, which restricted research design choices, exhibited slightly higher IQR (4.0 percentage points), largely attributable to imperfect adherence to the prescribed protocol. By contrast, the final stage, featuring standardized data cleaning, narrowed variation in estimated effects, achieving an IQR of 2.4 percentage points.”

“Reported sample sizes also displayed significant convergence under more restrictive conditions, with the IQR dropping from 295,187 in the first stage to 29,144 in the second, and effectively zero by the third.”

“Our findings underscore the critical importance of data cleaning in shaping applied microeconomic results and highlight avenues for future replication efforts.”

REFERENCE

AoI*: “Decisions, Decisions, Decisions: An Ethnographic Study of Researcher Discretion in Practice” by van Drimmelen et al. (2024)

ABSTRACT (taken from the article)

“This paper is a study of the decisions that researchers take during the execution of a research plan: their researcher discretion. Flexible research methods are generally seen as undesirable, and many methodologists urge to eliminate these so-called ‘researcher degrees of freedom’ from the research practice. However, what this looks like in practice is unclear.”

“Based on twelve months of ethnographic fieldwork in two end-of-life research groups in which we observed research practice, conducted interviews, and collected documents, we explore when researchers are required to make decisions, and what these decisions entail.”

“Our ethnographic study of research practice suggests that researcher discretion is an integral and inevitable aspect of research practice, as many elements of a research protocol will either need to be further operationalised or adapted during its execution. Moreover, it may be difficult for researchers to identify their own discretion, limiting their effectivity in transparency.”

REFERENCE

AoI*: “Open minds, tied hands: Awareness, behavior, and reasoning on open science and irresponsible research behavior” by Wiradhany et al. (2025)

ABSTRACT (taken from the article)

“Knowledge on Open Science Practices (OSP) has been promoted through responsible conduct of research training and the development of open science infrastructure to combat Irresponsible Research Behavior (IRB). Yet, there is limited evidence for the efficacy of OSP in minimizing IRB.”

“We asked N=778 participants to fill in questionnaires that contain OSP and ethical reasoning vignettes, and report self-admission rates of IRB and personality traits. We found that against our initial prediction, even though OSP was negatively correlated with IRB, this correlation was very weak, and upon controlling for individual differences factors, OSP neither predicted IRB nor was this relationship moderated by ethical reasoning.”

“On the other hand, individual differences factors, namely dark personality triad, and conscientiousness and openness, contributed more to IRB than OSP knowledge.”

“Our findings suggest that OSP knowledge needs to be complemented by the development of ethical virtues to encounter IRBs more effectively.”

REFERENCE

RÖSELER: Replication Research Symposium and Journal

Efforts to teach, collect, curate, and guide replication research are culminating in the new diamond open access journal Replication Research, which will launch in late 2025. The Framework for Open and Reproducible Research Training (FORRT; forrt.org) and the Münster Center for Open Science have spearheaded several initiatives to bolster replication research across various disciplines. From May 14–16, 2025, we are excited to invite researchers to join us in Münster, as well as online, for the Replication Research Symposium. This event will mark a significant step toward the launch of our interdisciplinary journal dedicated to reproductions, replications, and discussions on the methodologies involved. But let’s start from the beginning: What is going on at FORRT?

Finding and exploring replications: FORRT Replication Database (FReD) includes hundreds of replication studies and thousands of replication findings – which we define as tests of previously established claims using different data. Researchers can use the Annotator to have their reference lists auto-checked to see whether they cited original studies that have been replicated. With the Explorer, they get an overview of all studies and can analyze replication rates across different success criteria or moderator variables.

Meta-analyzing replication outcomes: To increase the accessibility of the database, we created the FReD R package, with which researchers can run their own analyses or run the Shiny apps locally. In a vignette, we outline different replication success criteria and show how this choice can affect the overall replication success rate.

Teaching replications: One of FORRT’s core ideas is to help researchers from all fields learn about openness and reproducibility. Among numerous projects, we clarified terminology (Glossary of Open Science Terms), produced educational materials such as an educationally driven review paper on the transformative impact of the replication crisis, a syllabus and slides with lecture and pedagogical notes (see Educational toolkit), and curated resources. We are also now working together with experts from economics, psychology, medicine, and other fields to create an interdisciplinary guide to carrying out replications and reproductions.

Publishing replication studies and discussing standards across fields: We are currently developing the journal Replication Research, a diamond open-access outlet for replication and reproduction studies and discussions about the respective methods. There will be reproducibility checks for all published studies and standardized machine-readable templates that authors are encouraged to use. We are currently building the journal with a network of 20 experts from different fields. From February until April, we are organizing the Road to Replication Research via Zoom. This online discussion series is centered around different aspects of open and responsible scientific publishing and is open to anybody who wants to join the conversation, so that the journal is maximally open from the start. Finally, at the Replication Research Symposium, participants and experts from diverse fields such as psychology, economics, biology, medicine, marketing, meta-science, library science, humanities, and others will convene to discuss the significance and methodology of conducting replication and reproduction studies over three days in May 2025. This symposium will further shape Replication Research and we invite researchers from all fields to present their replications, reproductions, or methodological discussions. The journal launch is then slated for late 2025.

For more information about Replication Research, the upcoming symposium, and the online discussion series about the creation of the journal click here.

Lukas Röseler is the managing director of the Münster Center for Open Science at the University of Münster, one of the project leads at FORRT’s Replication Hub, and will be the managing editor of Replication Research. He can be contacted at lukas.roeseler@uni-muenster.de.

ROODMAN: Appeal to Me – First Trial of a “Replication Opinion”

Posted on by replicationnetwork

[This blog is a repost of a blog that first appeared at davidroodman.com. It is republished here with permission from the author.]

My employer, Open Philanthropy, strives to make grants in light of evidence. Of course, many uncertainties in our decision-making are irreducible. No amount of thumbing through peer-reviewed journals will tell us how great a threat AI will pose decades hence, or whether a group we fund will get a vaccine to market or a bill to the governor’s desk. But we have checked journals for insight into many topics, such as the odds of a grid-destabilizing geomagnetic storm, and how much building new schools boosts what kids earn when they grow up.

When we draw on research, we vet it in rare depth (as does GiveWell, from which we spun off). I have sometimes spent months replicating and reanalyzing a key study—checking for bugs in the computer code, thinking about how I would run the numbers differently and how I would interpret the results. This interface between research and practice might seem like a picture of harmony, since researchers want their work to guide decision-making for the public good and decision-makers like Open Philanthropy want to receive such guidance.

Yet I have come to see how cultural misunderstandings prevail at this interface. From my side, there are two problems. First, about half the time I reanalyze a study, I find that there are important bugs in the code, or that adding more data makes the mathematical finding go away, or that there’s a compelling alternative explanation for the results. (Caveat: most of my experience is with non-randomized studies.)

Second, when I send my critical findings to the journal that peer-reviewed and published the original research, the editors usually don’t seem interested (recent exception).

Seeing the ivory tower as a bastion of truth-seeking, I used to be surprised. I understand now that, because of how the academy works, in particular, because of how the individuals within academia respond to incentives beyond their control, we consumers of research are sometimes more truth-seeking than the producers.

Last fall I read a tiny illustration of the second problem, and it inspired me to try something new. Dartmouth economist Paul Novosad tweeted his pique with economics journals over how they handle challenges to published papers:

As you might glean from the truncated screenshots, the starting point for debate is a paper published in 2019. It finds that U.S. immigration judges were less likely to grant asylum on warmer days. For each 10°F the temperature went up, the chance of winning asylum went down 1 percentage point.

The critique was written by another academic. It fixes errors in the original paper, expands the data set, and finds no such link from heat to grace. In the rejoinder, the original authors acknowledge errors but say their conclusion stands. “AEJ” (American Economic Journal: Applied Economics) published all three articles in the debate. As you can see, the dueling abstracts confused even an expert.

So I appointed myself judge in the case. Which I’ve never seen anyone do before, at least not so formally. I did my best to hear out both sides (though the “hearing” was reading), then identify and probe key points of disagreement. I figured my take would be more independent and credible than anything either party to the debate could write. I hoped to demonstrate and think about how academia sometimes struggles to serve the cause of truth-seeking. And I could experiment with this new form as one way to improve matters.

I just filed my opinion, which is to say, the Institute for Replication has posted it. (Open Philanthropy partly funds them.) My colleague Matt Clancy has pioneered living literature reviews; he suggested that I make this opinion a living document as well. If either party to the debate, or anyone else, changes my mind about anything in the opinion, I will revise it while preserving the history.

Verdict

My conclusion was more one-sided than I had expected. I came down in favor of the commenter. The authors of the original paper defend their finding by arguing that in retrospect they should have excluded the quarter of their sample consisting of asylum applications filed by people from China. Yes, they concede, correcting the errors mostly erases their original finding. But it reappears after Chinese are excluded.

This argument did not persuade me. True, during the period of this study, 2000–04, most Chinese asylum-seekers applied under a special U.S. law meant to give safe harbor to women fearing forced sterilization and abortion in their home country.

The authors seem to argue that because grounds for asylum were more demonstrable in these cases—anyone could read about the draconian enforcement of China’s one-child policy—immigration judges effectively lacked much discretion. And if outdoor temperature couldn’t meaningfully affect their decisions, the cases were best dropped from a study of precisely that connection.

But this premise is flatly contradicted by a study the authors cite called “Refugee Roulette.” In the study, Figure 6 shows that judges differed widely in how often they granted asylum to Chinese applicants. One did so less than 5% of the time, another more than 90%, and the rest were spread evenly between. (For a more thorough discussion, read sections 4.4 and 6.1 of my opinion.)

Thus while I do not dispute that there is a correlation between temperature and asylum grants in a particular subset of the data, I think it is best explained by p-hacking or some other form of “filtration,” in which, consciously or not, researchers gravitate toward results that happen to look statistically significant. (In fairness, they know that peer reviewers, editors, and readers gravitate to the same sorts of results, and getting a paper into a good journal can make a career.)

The nature of the defense raises a question about how the journal handled the dispute. It published the original authors’ rejoinder as a Correction. Yet, while one might agree that it is better to exclude Chinese from the analysis, I think their inclusion in the original was not an error, and therefore their exclusion is not a correction. Thus, one way the journal might have headed off Novosad’s befuddlement would have been by insisting that Corrections only make corrections.

What’s wrong with this picture?

To recap:

– Two economists performed a quantitative analysis of a clever, novel question.

– It underwent peer review.

– It was published in one of the top journals in economics. Its data and computer code were posted online, per the journal’s policy.

– Another researcher promptly responded that the analysis contains errors (such as computing average daytime temperature with respect to Greenwich time rather than local time), and that it could have been done on a much larger data set (for 1990 to ~2019 instead of 2000–04). These changes make the headline findings go away.

– After behind-the-scenes back and forth among the disputants and editors, the journal published the comment and rejoinder.

– These new articles confused even an expert.

– An outsider (me) delved into the debate and found that it’s actually a pretty easy call.

If you score the journal on whether it successfully illuminated its readership as to the truth, then I think it is kind of 0 for 2.

[Update: I submitted the opinion to the journal, which promptly rejected it. I understand that the submission was an odd duck. But if I’m being harsh I can raise the count to 0 for 3.]

That said, AEJ Applied did support dialogue between economists that eventually brought the truth out. In particular, by requiring public posting of data and code (an area where this journal and its siblings have been pioneers), it facilitated rapid scrutiny.

Still, it bears emphasizing: For quality assurance, the data sharing was much more valuable than the peer review. And, whether for lack of time or reluctance to take sides, the journal’s handling of the dispute obscured the truth.

My purpose in examining this example is not to call down a thunderbolt on anyone, from the Olympian heights of a funding body. It is rather to use a concrete story to illustrate the larger patterns I mentioned earlier.

Despite having undergone peer review, many published studies in the social sciences and epidemiology do not withstand close scrutiny. When they are challenged, journal editors have a hard time managing the debate in a way that produces more light than heat.

I have critiqued papers about the impact of foreign aid, microcredit, foreign aid, deworming, malaria eradication, foreign aid, geomagnetic storm risk, incarceration, schooling, more schooling, broadband, foreign aid, malnutrition, ….

Many of those critiques I have submitted to journals, typically only to receive polite rejections. I obviously lack objectivity. But it has struck me as strange that, in these instances, we on the outside of academia seem more concerned about getting to the truth than those on the inside. Sometimes I’ve wished I could appeal to an independent authority to review a case and either validate my take or put me in my place.

That yearning is what primed me to respond to Novosad’s tweet by donning the robe of a judge myself. (I passed on the wig.)

I’ve never edited a journal, but I’ve talked to people who have, and I have some idea of what is going on. Editors juggle many considerations besides squeezing maximum truth juice out of any particular study. Fully grasping a replication debate takes work—imagine the parties lobbing 25-page missives at each other, dense with equations, tables, and graphs—and editors are busy.

Published comments don’t get cited much anyway, and editors keep an eye on how much their journals get cited. They may also weigh the personal costs for the people whose reputations are at stake. Many journals, especially those published by professional associations, want to be open to all comers—to be the moderator, not the panelist, the platform, not the content provider.

The job they set for themselves is not quite to assess the reliability of any given study (a tall order) but to certify that each article meets a minimum standard, to support the collective dialogue through which humanity seeks scientific truth.

Then, too, I think journal editors often care a lot about whether a paper makes a “contribution” such as a novel question, data source, or analytical method. Closer to home, junior editors may think twice before welcoming criticism that could harm the reputation of their journal or ruffle the feathers of more powerful members of their flock. Senior editors may have gotten where they are by thinking in the same, savvy way.

Modern science is the best system ever developed for pursuing truth. But it is still populated by human beings (for how much longer?) whose cognitive wiring makes the process uncomfortable and imperfect. Humans are tribal creatures—not as wired for selflessness as your average ant, but more apt to go to war than an eel or an eagle.

Among the bits of psychological glue that bind us are shared ideas about “is” and “ought.” Imperialists and evangelists have long influenced shared ideas in order to expand and solidify the groups over which they hold sway. The links between belief, belonging, and power are why the notion that evidence trumps belief was so revolutionary when the Roman church tried Galileo and sentenced him to house arrest, and why the idea, despite making modernity possible, remains discomfiting to this day.

The inefficiency in pursuing truth has real costs for society. Some social science research influences decisions by private philanthropies and public agencies, decisions whose stakes can be measured in human lives, or in the millions, billions, even trillions of dollars. Yet individual studies receive perhaps hundreds of dollars’ worth of time in peer review, and even that within a system in which getting each paper as close as possible to the truth is only one of several competing priorities.

Making science work better is the stuff of metascience, an area in which Open Philanthropy makes grants. It’s a big topic. Here, I’ll merely toss out the idea that if these new-fangled replication opinions were regularly produced, they could somewhat mitigate the structural deprecation of truth-seeking.

On the demand side—among decision-makers using research—replication opinions could improve the vetting of disputed studies, while efficiently targeting the ones that matter most. (Related idea here.)

On the supply side, a heightened awareness that an “appeals court” could upstage journals in a role laypeople and policymakers expect them to fill—performing quality assurance on what they publish—could stimulate the journals to handle replication debates in a way that better serves their readers and society.

Reflections on writing the replication opinion

Writing a novel piece led me to novel questions. To prepare for writing my opinion, I read about how judges write theirs. Judicial opinions usually have a few standard sections. They review the history of the case (what happened to bring it about, what motions were filed); list agreed facts; frame the question to be decided; enunciate some standard that a party has to meet, perhaps handed down by the Supreme Court; and then bring the facts to the standard to reach a decision.

Could I follow that outline? Reviewing the case history was easy enough. I had the papers and could inventory their technical points. The data and computer code behind the papers are on the web, so I could rerun the code and stipulate facts such as that a particular statistical procedure applied to a particular data set generates a particular output.

Figuring out what I was trying to judge was harder. Surely it was not whether, for all people, places, and times, heat makes us less gracious. Nor should I try to decide that question even in the study’s context, which was U.S. asylum cases decided between 2000 and 2004.

Truth in the social sciences is rarely absolute. We use statistics precisely because we know that there is noise in every measurement, uncertainty in every finding. In addition, by Bayes’ Rule, the conclusions we draw from any one piece of evidence depend on the prior knowledge we bring to it, which is shaped by other evidence.

Someone who has read 10 ironclad articles on how temperature affects asylum decisions should hardly be moved by one more. Yet I think those 10 other studies, if they existed, would lie beyond the scope of this case.
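The point about prior evidence can be made concrete with a normal-normal Bayesian update, in which an existing belief about an effect and a new estimate are combined by weighting each with its precision (one over its variance). This is only an illustrative sketch: the function `normal_update` and every number in it are hypothetical and are not taken from the asylum study or the debate around it. It compares a reader with a diffuse prior to one whose prior pools 10 earlier studies that, hypothetically, found no effect.

```python
import math


def normal_update(prior_mean, prior_sd, estimate, se):
    """Precision-weighted (normal-normal conjugate) update of a belief about an effect."""
    prior_prec = 1.0 / prior_sd**2   # precision of what the reader already believes
    data_prec = 1.0 / se**2          # precision of the new study's estimate
    post_prec = prior_prec + data_prec
    post_mean = (prior_prec * prior_mean + data_prec * estimate) / post_prec
    return post_mean, math.sqrt(1.0 / post_prec)


# Hypothetical new study: estimated effect 1.0 with standard error 0.5.
estimate, se = 1.0, 0.5

# Reader with little prior evidence: a diffuse prior centred on zero.
diffuse = normal_update(0.0, 2.0, estimate, se)

# Reader who has pooled 10 earlier studies (each with SE 0.5) averaging zero:
# the pooled prior SD is 0.5 / sqrt(10).
informed = normal_update(0.0, 0.5 / math.sqrt(10), estimate, se)

print(f"diffuse prior:  posterior mean {diffuse[0]:.3f}")   # → 0.941
print(f"informed prior: posterior mean {informed[0]:.3f}")  # → 0.091
```

With these made-up inputs, the same new estimate moves the first reader most of the way toward 1.0 but barely moves the second, which is the sense in which one more study should hardly sway someone who has already read ten.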

That means that my replication opinion is not about the effects of temperature on behavior in any setting. It’s more meta than that. It’s about how much this new paper should shift or strengthen one’s views on the question.

After reflecting on these complications, here is what I decided to decide: to the extent that a reasonable observer updated their priors after reading the original paper, how much should the subsequent debate reverse or strengthen that update?

My judgment need not have been binary. Unlike a jury burdened with deciding guilt or innocence, a replication opinion can come down in the middle, again by Bayes’ Rule. Sometimes there is more than one reasonable way to run the numbers and more than one reasonable way to interpret the results.

I sought rubrics through which to organize my discussion, both to discipline my own reasoning and to set precedents, should I or anyone else do this again. I borrowed former colleague Michael Clemens’s typology of the varieties of replication and robustness testing, as well as a typology of statistical issues from Shadish, Cook, and Campbell.

And I made a list of study traits that we can expect to be associated, on average, with publication bias and other kinds of result filtration. For example, there is evidence that in top journals, junior economists, who are running the publish-or-perish gauntlet toward tenure, are more likely to report results that just clear conventional thresholds for statistical significance. That is consistent with the theory that the researchers on whom the system’s perverse incentives impinge most strongly are most apt to run the numbers several ways and emphasize the “significant” runs in their write-ups.
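A short simulation illustrates why running the numbers several ways and emphasizing the significant runs inflates false positives. It assumes, hypothetically, that the true effect is zero and that each specification yields an independent z-statistic; real specifications of the same data are usually correlated, so this sketch overstates the inflation. The helper `share_significant` is illustrative and is not drawn from any study cited here.

```python
import random


def share_significant(n_specs, n_sims=20000, crit=1.96, seed=1):
    """Fraction of simulated null studies in which at least one of n_specs
    independent specifications has |z| > crit (two-sided p < 0.05)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        # Under the null the true effect is zero, so each z-statistic is N(0, 1).
        if any(abs(rng.gauss(0.0, 1.0)) > crit for _ in range(n_specs)):
            hits += 1
    return hits / n_sims


for k in (1, 5, 10):
    # Simulated share vs. the closed form for k independent tests.
    print(k, round(share_significant(k), 3), round(1 - 0.95**k, 3))
```

Under independence the simulated share should track the closed form 1 - 0.95^k, roughly 0.05, 0.23, and 0.40 for 1, 5, and 10 specifications.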

One tricky issue was how much I should analyze the data myself. The upside could be more insight. The downside could be a loss of (perceived) objectivity if the self-appointed referee starts playing the game. Wisely or not, I gave myself some leeway here. Surely real judges also rely on their knowledge about the world, not just what the parties submit as evidence.

For example, in addition to its analysis of asylum decisions, the original paper checks whether the California parole board was less likely to grant parole on warmer days in 2012–15. Partly because the critical comment did not engage with this side analysis, I revisited it myself. I reran it on the next quadrennium, 2016–19, while changing the original computer code as little as possible. (Here, too, the apparent impact of temperature went away.)

Closing statement

The stakes in this case are probably low. While the question of how temperature affects human decision-making links broadly to climate change, and the arbitrariness of the American immigration system is a serious concern, I would be surprised if any important policy decision in the next few years turns on this research.

But the case illustrates a much larger problem. Some studies do influence important decisions. That they have been peer-reviewed should hardly reassure. Judicious post-publication review of important studies, perhaps including “replication opinions,” can give decision-makers with real dollars and real livelihoods on the line a clearer sense of what the data do and do not tell us.

Unfortunately, powerful incentives within academia, rooted in human nature, have generally discouraged such Socratic inquiry.

I like to think of myself as judicious. As to whether I’ve lived up to my self-image in this case, I will let you be the judge. At any rate, I figure that in the face of hard problems, it is good to try new things. We will see if this experiment is replicated, and if that does much good.

David Roodman is Senior Advisor at Open Philanthropy. He can be contacted at david@davidroodman.com

Category: GUEST BLOGS Tags: Academic incentives, Comments, economics, Evidence-based policy, Journal policy, Meta-Science, Open Philanthropy, peer review, replications, Truth-seeking